Can AI Replace the Therapist?

A Critical Look at Artificial Intelligence in Psychotherapy

By Pavol Gabaj, 9th of June 2025 in London

Artificial Intelligence (AI) is no longer a future concept in mental health — it’s already here. From therapy chatbots and digital triage tools to virtual reality interventions, AI is increasingly shaping how people access and experience psychological support. As therapists, we must ask: can these tools replicate the essence of therapy? Or are they transforming something fundamental about our work?

What AI Can (and Can’t) Do in Therapy

AI offers remarkable functionality. It can conduct triage assessments, summarise session notes, guide CBT-based exercises, and monitor symptoms. Apps like Wysa and Woebot provide structured emotional support, while tools like Limbic Access help reduce administrative pressure in NHS services.

But AI cannot empathise. It doesn’t feel. It lacks embodied awareness, existential resonance, and the creative spontaneity human therapists bring. It can generate prompts — but it cannot sit with your silence, notice your tear before you do, or track subtle shifts in relational dynamics.

The Rise of AI Chatbots: Hope or Hazard?

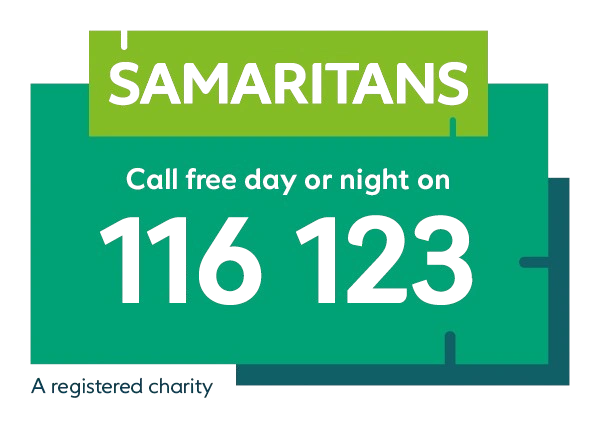

AI chatbots can feel helpful. Users often describe apps like Wysa as comforting, consistent, and non-judgemental. In moments of distress, they may offer a sense of being heard. However, these systems are inherently limited. Platforms like Replika and Character.AI have been linked to serious incidents, including emotional manipulation and even suicide. These bots can mirror harmful beliefs back to users without challenge — making them dangerously agreeable.

Ethical Gaps and Accountability

The ethical infrastructure around AI in therapy is worryingly thin. There are no established complaints procedures for chatbot-related harm. Users may not know how their data is stored or used, and some bots are trained on user conversations. Professional bodies like the APA have called on the FTC to act, while UKCP warns that human therapists could be held accountable for harm caused by bots that clients use alongside traditional therapy.

What Our Professional Bodies Say

Professional organisations are speaking up:

- UKCP calls for a research-informed, ethically grounded approach. It emphasises education, guidance, and integration strategies.
- BACP acknowledges AI’s potential for reducing admin and enhancing tools like VR but insists that it must never replace human presence.
- APA promotes public education and the need for regulatory oversight.
- BPS stresses that AI is not a “silver bullet.” It warns against overreliance, calling for investment in human-led care and policies that prioritise meaningful connection, privacy, and safety.

The consensus? AI can assist — but it must not substitute.

Where AI Supports Therapy

Used wisely, AI has genuine value:

- Wysa and similar apps offer emotional first aid for those on waiting lists.
- VR platforms like gameChange (for agoraphobia) and Phoenix (for self-esteem) provide immersive exposure therapy.
- Chatbots can assist with CBT structure and behavioural activation.
- AI tools support training through interactive simulations and increase accessibility for neurodivergent and disabled trainees.

When AI Becomes Dangerous

Problems arise when AI is marketed as therapy. Generative AI can convincingly simulate dialogue, but it has no ethical compass. It doesn’t understand trauma, power dynamics, or the moral responsibility therapists hold. It can neither rupture nor repair a therapeutic alliance. Some users form real emotional attachments to bots, which can lead to destabilising transference.

The Human Core of Therapy

Clients don’t come to therapy for perfect advice — they come to be seen. The true value of therapy lies in presence: the ability to sit together in discomfort, to attune to what’s unspoken, to witness someone’s inner world. As one UKCP member put it, the best moments in therapy are often unpredictable, unrepeatable, and unforgettable. You won’t get that from an algorithm.

Integrating Ethical Guidance: Insights from Fiske et al.

A 2019 paper by Fiske, Henningsen, and Buyx outlines key ethical concerns around embodied AI in psychotherapy. They call for:

- Robust data ethics and risk assessments
- Regulatory oversight for chatbot services
- Informed consent, especially for vulnerable users
- AI training for therapists
- Firm safeguards to ensure AI complements, rather than replaces, human care

They also highlight deeper risks: biased algorithms, over-attachment, transference, and a shifting understanding of what care and connection really mean.

What Therapists Need to Do Now

To meet this moment, we must:

- Build AI literacy
- Engage in ethical discussion and reflection
- Support and participate in research
- Advocate for robust data protection
- Use AI as a tool, not a crutch

We also need strong guidance from professional bodies, and proactive regulation before commercial interests compromise clinical integrity.

Conclusion: Informed, Not Replaced

AI is here to stay. It can support us by increasing access, reducing admin burdens, and offering new tools. But it must not be mistaken for what it is not. The therapeutic relationship is not a product — it is a living, dynamic, human process.

We, as practitioners, must lead the conversation. Not from fear, but from insight. Not to stop AI, but to shape it.

Let us be informed — not replaced.

Questions for Reflection or Discussion

- How should therapy services respond when clients use chatbots between sessions?
- Where do we draw the line between digital support and real therapy?
- Could AI deepen the therapeutic relationship, or risk disrupting it?
- Are you prepared to answer client questions about AI tools?
- Who is liable if a chatbot gives dangerous advice?
- Are therapists being silently replaced, one chatbot at a time?
- What does good CPD on AI and psychotherapy look like?
- Can AI safely support clients dealing with trauma, grief, or suicidality?
- Should your organisation develop a policy on AI use in therapy and training?

Interested in a thought-provoking webinar or public talk on AI and psychotherapy?

Pavol Gabaj offers dynamic, evidence-informed presentations on the ethical, clinical, and human challenges of AI in mental health.

📧 Contact: pavol@gabajtherapy.co.uk

📞 Phone/text: 07547 398306

🌐 Website: gabajtherapy.co.uk